Prime Minister Anthony Albanese and Leader of the Opposition Peter Dutton during the announcement of the Australian Paralympic Team for the Paris 2024 Olympic Games at Parliament House in Canberra [Source: AAP Image/Mick Tsikas]

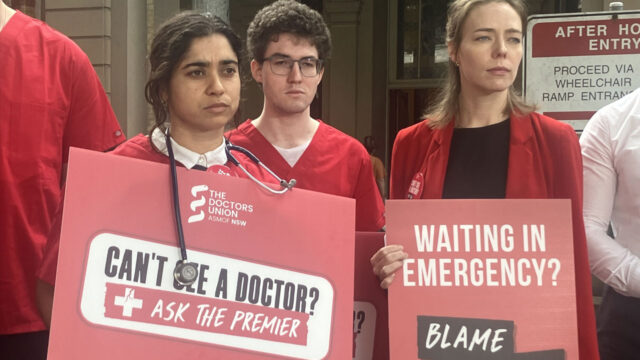

Deepfake political advertisements with videos pretending to be the prime minister or opposition leader will be allowed at the next federal election under contentious recommendations from an AI inquiry.

Voluntary rules about labelling AI content could be fast-tracked in time for the 2025 election and mandatory restrictions applied to political ads when they are ready.

The Adopting Artificial Intelligence inquiry issued the recommendations in its interim report on Friday, but failed to secure the endorsement of a majority of its members, with four out of six senators clashing over its content.

In a dissenting report, inquiry deputy chair Greens Senator David Shoebridge said the recommendations would allow deepfake political ads to “mislead voters or damage candidates’ reputations”.

Two coalition senators argued a rushed process would unfairly restrict freedom of speech.

The interim report comes after the AI inquiry was called in March to investigate risks and opportunities of the technology, and following six public hearings including testimony from academics, scientists, technology firms and social media companies.

Its recommendations included introducing laws to restrict or curtail deepfake political advertisements before the 2029 federal election.

The restrictions could apply to generative AI models, such as ChatGPT, Microsoft Copilot and Google Gemini, as well as social media platforms.

Mandatory AI rules for high-risk settings should also apply to election material when they are introduced, the report said, and the government should increase its efforts to boost AI literacy, including among parliamentarians and government agencies.

The report also recommended the government introduce voluntary rules for labelling AI-generated content, with a code launched before the next federal election.

The recommendations were criticised by some members of the Senate inquiry, with Senator Shoebridge saying the interim report failed to propose “urgent remedies” needed to protect Australian democratic processes.

A temporary, targeted ban on political deepfakes should be introduced to help voters participating in the next federal election, he said.

“Under current laws, it would be legal to have a deepfake video pretending to be the prime minister or the opposition leader saying something they never, in fact, said as long as this is properly authorised under the Electoral Act,” Senator Shoebridge said.

“That falls well below community expectations of our electoral regulation.”

Independent Senator David Pocock said rules to outlaw the use of deepfake videos and voice clones would be critical before the next federal election and could be refined by the 2029 poll.

“Suggestions that we need to go slowly in the face of rapidly changing use of AI seem ill-advised,” he said.

“There should be a swift move to put laws in place ahead of the next federal election that rule out the use of generative AI.”

Coalition senators James McGrath and Linda Reynolds issued a dissenting report for different reasons, saying they would not support quick legislative reforms or measures to govern truth in political advertising.

They said Australia should only introduce restrictions on AI content after reviewing the laws and experience of the US election later in 2024.

“The coalition members of the committee are concerned that should the government introduce a rushed regulatory AI model with prohibitions on freedom of speech in an attempt to protect Australia’s democracy, that the cure will be worse than the disease,” their report said.

Submissions to a consultation on mandatory rules for the use of AI in high-risk settings are being reviewed after closing on October 4.

The AI inquiry’s final report is expected in November.
